On Responsible Artificial Intelligence and Risk Management – Fundamentals and Impacts on Tax Administrations

Introduction

The use of Artificial Intelligence (AI) techniques is growing across society and in the most diverse fields of activity, with the potential to transform communities and people’s lives. The recent launch of ChatGPT and the anticipation surrounding the even more powerful GPT-4 [1] drew in many segments of society: more than 100 million active accounts as of January 2023, thousands of published articles and an impressive increase in activity in discussion forums on the topic. This broad participation brought to the fore discussions, previously reserved for specialists and social scientists, about the role of humans in the society of the future and the risks associated with the use of these technologies. Ultimately, the ever wider use of AI affects individuals, groups, organizations, communities, society, the environment and the planet. The risks associated with these impacts can take many forms.

Tax administrations, operating within this environment, quickly identified important AI applications that could bring improvements, some possibly disruptive, to their different areas of activity, such as taxpayer services, audits and collection, and have begun to evaluate and test these uses.

Like any new technology, AI techniques will not advance uniformly. The consulting firm Gartner proposed a model for evaluating the emergence and spread of technologies, called the “Hype Cycle for Emerging Technologies” [2], according to which a new technology can go through five phases during its adoption cycle: (1) innovation trigger, when a technology emerges and expectations of its applicability begin to grow; (2) peak of inflated expectations, when expectations of applicability reach their peak; (3) trough of disillusionment, when many expected uses disappoint; (4) slope of enlightenment, when the most well-founded expectations are identified and worked on; (5) plateau of productivity, when expectations are turned into products in established use. The figure below presents the Hype Cycle for AI for 2022, showing the position of several technologies potentially applicable to tax administrations. For details, see [3].

Source: Gartner Group

Responsible AI

The U.S. National Institute of Standards and Technology (NIST) states that “AI systems are inherently sociotechnical in nature, meaning that they are influenced by social dynamics and human behavior. The risks and benefits of AI can arise from the interaction of technical aspects combined with social factors related to the way a system is used, its interactions with other AI systems, who operates it and the social context in which it is implemented.” “Without the right controls, AI systems can amplify, perpetuate, or exacerbate inequitable or unwanted outcomes for individuals and communities. With the right controls, AI systems can mitigate and manage inequitable outcomes.”

These impacts are especially visible in tax administrations, which are governed by the fundamental principles of tax law [4]. The use of AI must therefore also observe rules or codes of conduct that ensure compliance with these principles, in addition to dealing with the associated risks.

In this general context, “Responsible AI” is defined as the practice of designing, developing and implementing AI systems with the good intention of empowering employees and companies, and fairly impacting customers and society, enabling institutions to build trust and scale AI with consistency [5].

To meet all these requirements, adequate management of the life cycle of AI systems must be established, especially risk management.

The US government, ISO/IEC and the OECD [6] have, most visibly, been working on these urgent issues in order to provide a framework for companies and governments to develop low-risk AI systems.

This initial guidance will certainly provide the basis for similar institutional and governmental frameworks. Tax administrations will thus also have an initial reference point from which to organize their internal structures to mitigate these risks and build reliable and adequate AI systems.

The first formalized Framework comes from NIST [6] and is presented below.

The Risk Management Framework for Artificial Intelligence (AI RMF) [7]

This Framework, developed by NIST with sponsorship from the US government [8], aims to be universally applicable to all AI technologies, in all sectors. It was published in January 2023 together with other related documents, such as an interactive playbook that organizes and graphically streamlines the Framework’s components and their implementation.

The first part of the document deals with the fundamental concepts and information that serve as a basis. The second part describes the core of the Framework in its four components. The third part contains annexes detailing the concepts and functions used.

The Framework deals with the use of equitable and responsible technologies, with social responsibility and sustainability. In addition, it was designed, according to its authors, to equip organizations and individuals, called AI actors, with approaches that increase the trustworthiness of systems and help foster the responsible design, development, implementation and use of AI systems over time.

The potential harms associated with AI systems were identified based on the following examples:

The Framework aims to be flexible and adaptable and to build on risk practices aligned with laws, regulations and standards, in addition to articulating the characteristics of a trustworthy AI system and proposing directions for addressing them. These characteristics would result in a system that is: valid and reliable; safe; secure and resilient; accountable and transparent; explainable and interpretable; privacy-enhanced; and fair, with harmful bias managed (ambitious!).

Some organizations, such as tax administrations, have established definitions and guidelines for risk tolerance, which can be expanded to cover the new challenges posed by the use of AI.

Based on these definitions, the AI RMF can be used to manage risks and document risk management processes.

How are the risks of AI different from the risks of traditional software?

An important aspect of the risks associated with AI systems is the emergence of new risks and the exacerbation of old ones relative to traditional software. These differences are summarized below, based on Appendix B of the AI RMF:

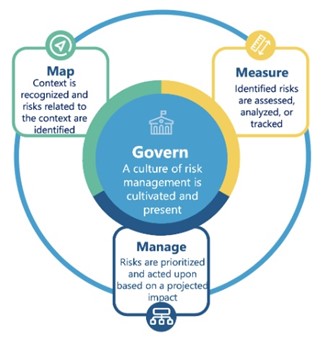

Composition of the AI RMF

The core of the AI RMF is organized into four functions, as shown below, subdivided into categories and subcategories.

Source: AI RMF Playbook

Governance (Govern):

It is a cross-cutting function that permeates the whole of risk management and enables the other functions of the process. Aspects of governance, such as those related to compliance or evaluation, should be integrated into the other functions.

Mapping (Map):

The Map function establishes the context to frame the risks related to an AI system. It covers the establishment of the context (objectives, expectations, actors, risk tolerance, etc.), the categorization of the system (methods used, models, limits, how the system will be used, scientific integrity, TEVV [Test, Evaluation, Verification and Validation], etc.), and capabilities and other aspects (benefits, usage, objectives, costs, scope, technology, legal risks, etc.).

Measuring (Measure):

The Measure function employs quantitative, qualitative, or mixed-methods tools, techniques, and methodologies to analyze, evaluate, compare, and monitor AI risk and related impacts.

Managing (Manage):

The Manage function involves the allocation of resources to mapped and measured risks on a regular basis and as defined by the Govern function. Risk management includes plans to respond, recover and communicate about incidents or events.
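As an illustration only, the interplay of the four functions can be pictured as a small risk register. The sketch below is not part of the AI RMF or its Playbook: the class names `Risk` and `RiskRegister`, the 1 to 5 scales, the likelihood times impact score and the example entries are all assumptions made for this example. Govern sets a tolerance, Map records the risk in its context, Measure assigns a score, and Manage selects the risks that need a response.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Risk:
    """One entry in a hypothetical AI risk register."""
    system: str         # Map: the AI system and context the risk belongs to
    description: str    # Map: what could go wrong
    likelihood: int     # Measure: 1 (rare) to 5 (almost certain), assumed scale
    impact: int         # Measure: 1 (negligible) to 5 (severe), assumed scale
    response: str = ""  # Manage: planned treatment, filled in later

    @property
    def score(self) -> int:
        # Likelihood x impact is a common risk-matrix convention,
        # not something the AI RMF prescribes.
        return self.likelihood * self.impact


@dataclass
class RiskRegister:
    tolerance: int  # Govern: highest score the organization accepts
    risks: List[Risk] = field(default_factory=list)

    def add(self, risk: Risk) -> None:
        """Record a mapped and measured risk."""
        self.risks.append(risk)

    def over_tolerance(self) -> List[Risk]:
        """Return risks whose score exceeds the tolerance, highest first."""
        exceeding = [r for r in self.risks if r.score > self.tolerance]
        return sorted(exceeding, key=lambda r: r.score, reverse=True)


# Invented example entries for a tax administration.
register = RiskRegister(tolerance=8)
register.add(Risk("audit selection model", "biased selection of taxpayers", 3, 5))
register.add(Risk("taxpayer chatbot", "outdated answer about a filing deadline", 2, 3))

# Manage: assign a response to every risk above the tolerance.
for risk in register.over_tolerance():
    risk.response = "review training data before deployment"
    print(f"{risk.system}: score {risk.score} -> {risk.response}")
```

A tax administration that already keeps a risk register could, under the same assumptions, extend its existing entries with AI-specific fields rather than build a separate structure.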

How to apply the AI RMF?

NIST provides a draft version of an interactive user manual for implementing the AI RMF (the AI RMF Playbook), based on researched best practices and trends. It covers the four defined functions.

This preliminary version is simple, but it can help to order the actions and priorities when implementing the Framework.

It is available at https://pages.nist.gov/AIRMF/

The OECD proposal

In February 2023 the OECD published the report “Advancing accountability in AI: governing and managing risks throughout the lifecycle for trustworthy AI” [9]. The concept of “trustworthy AI” requires the actors of the AI system to be answerable for the proper functioning of their systems, according to their function, context and ability to act.

This report presents research and findings on responsibilities and risks in AI systems, providing an overview of how risk management frameworks and the AI system lifecycle can be integrated to promote trustworthy AI. It explores processes and technical attributes and identifies tools and mechanisms to define, evaluate, treat and control risks at each stage of the AI system lifecycle.

As can be seen, these purposes are very close to those of NIST’s AI RMF. Such a coincidence was to be expected, given that these two institutions (and others) cooperate in working groups on the subject and have aligned objectives; both also draw on the concepts of the general standard ISO 31000.

The figure below presents a comparative diagram of how the NIST AI RMF and the OECD models are structured. The descriptive content of the levels is not exactly the same, but the purposes are aligned.

AI RISK MANAGEMENT FRAMEWORK

Comparative structural view (summary).

| NIST | OECD | Description |
| --- | --- | --- |
| Govern | Govern | Monitor, document, communicate, consult, incorporate |
| Map | Define | Scope, context, action, criteria |
| Measure | Assess | Identify and measure AI risks |
| Manage | Treat | Prevent, mitigate or block AI risks |

The OECD intends to add a range of “policies” to the model, with the support of the other collaborators.

In addition, it intends to publish in 2023 a catalog of tools to guide the construction of trustworthy AI systems, with an interactive collection of resources for the development and implementation of AI systems that respect human rights and are fair, transparent, explainable, robust and secure.

Final comments

AI systems present different and broader challenges than traditional information systems. Many successful applications have already been developed, but the estimated potential is huge and still largely untapped, as are the associated risks. In Gartner’s Hype Cycle, however, most of the AI technologies identified are located in the “innovation trigger” and “peak of inflated expectations” phases. A great deal of research, testing and development of possible use cases must still be carried out. Successes and failures will happen. But everything indicates that AI systems will be increasingly present in our lives and, especially, in tax administrations.

Risk management concepts and practices are not foreign to tax administrations. As strategic users of these rapidly advancing technologies, tax administrations should therefore stay connected with the ongoing initiatives for managing the life cycle of AI systems, especially risk management, in order to organize and adapt from the beginning with the best and most suitable practices.

*-*-*-*-*-*-*-*-*-*-*-*-*-*-

[1] Available at: https://openai.com/blog/chatgpt and https://www.technologyreview.com/2023/03/14/1069823/gpt-4-is-bigger-and-better-chatgpt-openai/

[2] Available at: https://www.techtarget.com/whatis/definition/Gartner-hype-cycle#

[3] Available at: https://www.gartner.com/en/articles/what-s-new-in-artificial-intelligence-from-the-2022-gartner-hype-cycle#

[4] Example of fundamental principles of tax law: compulsory, destination of public expenditure, proportionality, fairness, legality, generality (see example in https://blogs.ugto.mx/contador/clase-digital-4principios-fundamentales-del-derecho-tributario/)

[5] Available at: https://www.accenture.com/dk-en/services/applied-intelligence/ai-ethics-governance

[6] NIST (National Institute of Standards and Technology, Department of Commerce of the United States Government); ISO/IEC (International Organization for Standardization / International Electrotechnical Commission); OECD (Organisation for Economic Co-operation and Development)

[7] AI RMF – Artificial Intelligence Risk Management Framework. Available at: https://doi.org/10.6028/NIST.AI.100-1

[8] Available at: https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-1.pdf

[9] Available at: https://read.oecd.org/10.1787/2448f04b-en?format=pdf

